Face generation with DCGAN

I tried generating faces with a DCGAN.

Discriminator and generator

```python
from tensorflow.keras.layers import (
    Activation, BatchNormalization, Conv2D, Conv2DTranspose, Dense, Flatten,
    GaussianNoise, Input, LeakyReLU, ReLU, Reshape,
)
from tensorflow.keras.models import Model


def generator_model(input_shape):
    input_ch = 1024
    inputs = Input(shape=input_shape)
    # Project the latent vector and reshape it to an 8x8x1024 feature map
    x = Dense(input_ch * 8 * 8)(inputs)
    x = ReLU()(x)
    x = Reshape((8, 8, input_ch))(x)
    x = BatchNormalization(momentum=0.9)(x)
    x = ReLU()(x)
    # Four stride-2 transposed convolutions: 8 -> 16 -> 32 -> 64 -> 128
    for ch in (input_ch, input_ch // 2, input_ch // 4, input_ch // 8):
        x = Conv2DTranspose(ch, (4, 4), strides=(2, 2), padding="same")(x)
        x = BatchNormalization(momentum=0.9)(x)
        x = ReLU()(x)
    # Map to 3 RGB channels; tanh keeps pixel values in [-1, 1].
    # Output is 128x128x3, so the discriminator's input_shape must match it
    # (the training data described below is 64x64).
    x = Conv2DTranspose(3, (3, 3), strides=(1, 1), padding="same")(x)
    out = Activation("tanh")(x)
    return Model(inputs=inputs, outputs=out)


def discriminator_model(input_shape):
    ch_list = [64, 128, 128, 256, 256, 512]
    kernel_list = [4, 3, 4, 3, 4, 3]
    stride_list = [2, 1, 2, 1, 2, 1]
    inputs = Input(shape=input_shape)
    x = Conv2D(64, (3, 3), strides=1, padding="same")(inputs)
    for cl, kl, sl in zip(ch_list, kernel_list, stride_list):
        # stddev=0, so this GaussianNoise layer currently does nothing
        x = GaussianNoise(0)(x)
        x = LeakyReLU()(x)
        x = Conv2D(cl, kl, strides=sl, padding="same")(x)
    x = GaussianNoise(0)(x)
    x = LeakyReLU()(x)
    flatten = Flatten()(x)
    x2 = Dense(1)(flatten)
    # Probability that the input image is real
    act = Activation("sigmoid")(x2)
    return Model(inputs=inputs, outputs=act)
```
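It is easy to lose track of spatial sizes in these models. The arithmetic below is a minimal sketch that assumes Keras's `padding="same"` rules: a transposed convolution multiplies the size by the stride, and a regular convolution gives ceil(size / stride). The helper names are hypothetical. Under those rules the generator goes 8 → 16 → 32 → 64 → 128, so it emits 128×128 images. The discriminator, given a 64×64 input, ends at 8×8×512 before `Flatten`.

```python
import math


def conv_same_out(size: int, stride: int) -> int:
    """Spatial size after Conv2D with padding='same': ceil(size / stride)."""
    return math.ceil(size / stride)


def deconv_same_out(size: int, stride: int) -> int:
    """Spatial size after Conv2DTranspose with padding='same': size * stride."""
    return size * stride


def generator_sizes(start: int = 8, strides=(2, 2, 2, 2, 1)):
    """Trace the generator: the Reshape to start x start, then each deconv."""
    sizes = [start]
    for s in strides:
        sizes.append(deconv_same_out(sizes[-1], s))
    return sizes


def discriminator_sizes(start: int, strides=(1, 2, 1, 2, 1, 2, 1)):
    """Trace the discriminator: the first 3x3 conv (stride 1), then stride_list."""
    sizes = [start]
    for s in strides:
        sizes.append(conv_same_out(sizes[-1], s))
    return sizes


print(generator_sizes())        # [8, 16, 32, 64, 128, 128]
print(discriminator_sizes(64))  # [64, 64, 32, 32, 16, 16, 8, 8]
```

If the training images are 64×64, removing one stride-2 layer from the generator, or starting from a 4×4 map, would make its output match the data.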

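After a lot of trial and error, I ended up with the models above. Apparently, if you use batch normalization in the discriminator, it helps to put real images and fake images in separate batches instead of mixing them. The discriminator above does not use batch normalization, but the training loop still trains it on real and fake images separately: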

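```python
import numpy as np

# Train D on a batch of only real images, then on a batch of only generated images.
# Real targets are 0.9 instead of 1.0; fake targets are 0.
d_loss_real = discriminator.train_on_batch(
    image_batch, np.full((BATCH_SIZE, 1), 0.9))
d_loss_fake = discriminator.train_on_batch(
    generated_images, np.zeros((BATCH_SIZE, 1)))
```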
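Mixing the two would mean concatenating `image_batch` and `generated_images` into one batch of 2 × BATCH_SIZE with matching labels. With batch normalization, that batch's mean and variance would then blend real and fake statistics. The sketch below wraps the two-call version into one hypothetical `discriminator_step` function. It assumes Keras-style models that expose `predict` and `train_on_batch`, and it draws latent vectors from U(-1, 1). The post does not state the sampling distribution or the latent size.

```python
import numpy as np


def discriminator_step(generator, discriminator, image_batch, latent_dim, rng,
                       real_label=0.9):
    """One D update that keeps real and generated images in separate batches.

    Hypothetical helper: `generator` / `discriminator` are assumed to be Keras
    models (anything with predict / train_on_batch works). Sampling z from
    U(-1, 1) is an assumption, not taken from the post.
    """
    batch_size = len(image_batch)
    z = rng.uniform(-1.0, 1.0, size=(batch_size, latent_dim))
    generated_images = generator.predict(z)
    # Batch 1: real images only, so any BN layer sees real-image statistics
    d_loss_real = discriminator.train_on_batch(
        image_batch, np.full((batch_size, 1), real_label))
    # Batch 2: generated images only
    d_loss_fake = discriminator.train_on_batch(
        generated_images, np.zeros((batch_size, 1)))
    return d_loss_real, d_loss_fake
```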

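One commonly cited trick for stabilizing GAN training is to use noisy labels, so I set the target for real images to 0.9 instead of 1. Strictly speaking, replacing 1 with 0.9 is usually called one-sided label smoothing.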
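The two tricks can be combined. Below is a minimal sketch of a hypothetical `make_labels` helper. It smooths only the real targets, as in the post. Its optional `flip_prob` randomly swaps a fraction of targets to the other class, which is what "noisy labels" more often refers to. With the default `flip_prob=0.0` it reproduces the setup above.

```python
import numpy as np


def make_labels(batch_size, real, smooth=0.9, flip_prob=0.0, rng=None):
    """Discriminator targets of shape (batch_size, 1). Hypothetical helper.

    real=True  -> `smooth` (one-sided label smoothing; 0.9 as in the post)
    real=False -> 0.0 (fake targets are not smoothed)
    flip_prob  -> fraction of targets swapped to the other class ("noisy labels")
    """
    value = smooth if real else 0.0
    opposite = 0.0 if real else smooth
    labels = np.full((batch_size, 1), value, dtype=np.float32)
    if flip_prob > 0.0:
        rng = np.random.default_rng() if rng is None else rng
        flip = rng.random((batch_size, 1)) < flip_prob
        labels[flip] = opposite
    return labels
```

For example, `make_labels(BATCH_SIZE, real=True)` holds the same values as `[0.9] * BATCH_SIZE`, shaped as a column to match the discriminator's single sigmoid output.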

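I also added Gaussian noise to D, but I'm not sure it does anything. In fact, `GaussianNoise(0)` as written has a standard deviation of 0, so it leaves its input unchanged.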
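Keras's `GaussianNoise(stddev)` layer adds zero-mean Gaussian noise only in training mode and passes its input through unchanged at inference. The NumPy sketch below mirrors that behavior and shows why `stddev=0` is an identity in both modes. A common variant uses a nonzero stddev and decays it over training. The linear `annealed_stddev` schedule is a hypothetical example of that idea, not something the post used.

```python
import numpy as np


def gaussian_noise(x, stddev, training, rng):
    """Mirrors a Keras GaussianNoise layer: add N(0, stddev^2) only in training."""
    if not training or stddev == 0:
        return x
    return x + rng.normal(0.0, stddev, size=x.shape)


def annealed_stddev(epoch, total_epochs, start=0.1):
    """Hypothetical schedule: decay the noise stddev linearly from `start` to 0."""
    return start * max(0.0, 1.0 - epoch / total_epochs)
```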

Training data

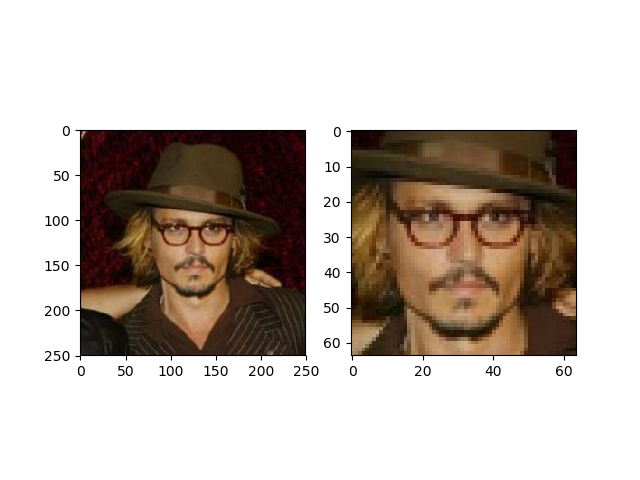

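I used LFW (Labeled Faces in the Wild), a face image dataset. The images are originally 250×250. I crop the face with an OpenCV cascade classifier and resize it to 64×64. For example, the cool middle-aged guy on the left becomes the image on the right.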
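The step after detection can be sketched without OpenCV. OpenCV's `CascadeClassifier.detectMultiScale` returns face boxes as `(x, y, w, h)`, and the resize would normally be done with `cv2.resize`. To stay dependency-free, the hypothetical `crop_resize_normalize` below crops and resizes with NumPy nearest-neighbor indexing. It then scales pixels to [-1, 1] to match the generator's `tanh` output. That normalization is an assumption, since the post does not say how pixels were scaled.

```python
import numpy as np


def crop_resize_normalize(image, box, size=64):
    """Crop a face box, resize it with nearest neighbor, scale uint8 to [-1, 1].

    `box` is (x, y, w, h), the format a cascade detector returns.
    """
    x, y, w, h = box
    face = image[y:y + h, x:x + w]
    # Nearest-neighbor source indices for a size x size output
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    resized = face[rows[:, None], cols[None, :]]
    # 0..255 -> -1..1, the range of the generator's tanh output
    return resized.astype(np.float32) / 127.5 - 1.0
```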

I trained for 500 epochs with a batch size of 32.
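The label arrays above are sized `BATCH_SIZE`, so every batch needs exactly 32 images. Below is a minimal sketch of an epoch iterator that shuffles the data and drops the short final batch. It also counts the resulting number of update steps. Both function names are hypothetical.

```python
import numpy as np


def iterate_minibatches(images, batch_size, rng):
    """Yield shuffled, full-size batches for one epoch (a short tail is dropped)."""
    order = rng.permutation(len(images))
    for start in range(0, len(images) - batch_size + 1, batch_size):
        yield images[order[start:start + batch_size]]


def total_updates(num_images, batch_size=32, epochs=500):
    """Number of update steps over all epochs when the partial batch is dropped."""
    return (num_images // batch_size) * epochs
```

The full LFW set has 13,233 images, which would give 413 steps per epoch and 206,500 steps over 500 epochs. The post does not say how many faces remained after cascade detection, so these numbers are only an illustration.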

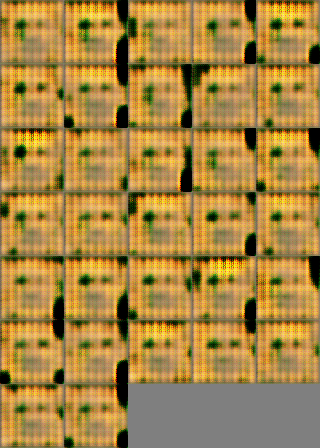

Results

I also tried interpolating between points in the latent space.
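The post does not say how the interpolation was done. Below is a minimal sketch of two common choices: linear interpolation and spherical interpolation (slerp). Slerp is often preferred for Gaussian or uniform latent vectors because it avoids passing through the low-norm region near the origin. A latent size of 100 is an assumption. The resulting stack of vectors would then be passed to `generator.predict` to render one face per step.

```python
import numpy as np


def lerp(z0, z1, t):
    """Linear interpolation between two latent vectors."""
    return (1.0 - t) * z0 + t * z1


def slerp(z0, z1, t, eps=1e-8):
    """Spherical interpolation; falls back to lerp for (anti)parallel vectors."""
    cos_omega = np.dot(z0, z1) / (np.linalg.norm(z0) * np.linalg.norm(z1))
    omega = np.arccos(np.clip(cos_omega, -1.0, 1.0))
    if np.sin(omega) < eps:
        return lerp(z0, z1, t)
    return (np.sin((1.0 - t) * omega) * z0 + np.sin(t * omega) * z1) / np.sin(omega)


def interpolation_path(z0, z1, steps=8, mode="slerp"):
    """Stack of latent vectors from z0 to z1 (shape: steps x latent_dim)."""
    fn = slerp if mode == "slerp" else lerp
    return np.stack([fn(z0, z1, t) for t in np.linspace(0.0, 1.0, steps)])


rng = np.random.default_rng(0)
z0, z1 = rng.uniform(-1.0, 1.0, size=(2, 100))  # latent_dim=100 is an assumption
path = interpolation_path(z0, z1, steps=8)      # feed to generator.predict(path)
```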

Next time, maybe I'll write about feature embedding...